By Daniela Rus, PhD, Director of MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL)

Autonomous vehicles, or AVs, have not had an easy ride. Still, few doubt the extraordinary potential of self-driving vehicles. As Lawrence D. Burns, a former GM technology executive and a consultant on Google’s driverless cars, states in his just-released Autonomy: The Quest to Build the Driverless Car, self-driving cars “will transform the way we live, the way we get around, and the way we do business.” How do we get there? By increasing the level of autonomy self-driving vehicles can achieve. That’s precisely what we’re working to do at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL).

Today, a car crash occurs every 5 seconds in the United States.[1] Globally, road traffic injuries are the eighth leading cause of death, with about 1.24 million lives lost every year.[2] In addition to this terrible human cost, these crashes take an enormous economic toll. The National Highway Traffic Safety Administration has calculated the economic cost in the United States at about $277B a year.[3] Putting a dent in these numbers is an enormous challenge, and self-driving vehicles seem to be the only real solution.

The good news is that technology is being developed today that will give cars the ability to learn how to drive safely and reliably in the not-too-distant future. Newly developed methods for end-to-end learning are enabling AVs to work in simple situations some of the time. Machine learning is helping to create a collective “brain” that can continuously learn not only how to operate a vehicle safely, but how to avoid the mistakes we humans make every day. And machine knowledge might even be used in the future to enable the cars we drive to become our trusted partners, helping us navigate tricky roads, correcting our mistakes when we’re tired, and even reminding us to make that forgotten call to Mom that we promised to make—yesterday. The Holy Grail of delivering truly autonomous self-driving cars is almost within our reach.

The bad news is that the path toward that Holy Grail has many bumps in the road. A handful of highly publicized accidents have made people question whether AVs can really deliver the safety they promise, and street tests haven’t done anything to endear self-driving vehicles to the public. The latest: Alphabet’s new Waymo vehicles have made headlines for irritating local drivers by blocking traffic and hesitating over maneuvers, such as merges and unprotected left turns, that humans make easily.

But even the bad news has a flip side. While the challenges are undeniable, systems that learn continuously are, by nature, constantly improving. As these technologies evolve, so will the solutions they are able to deliver. Autonomous vehicles will learn to do things better, and they will make our roads safer by eliminating human error.

Car manufacturers get it. The Waymo car has been lauded for driving several million accident-free miles. Mercedes already has a prototype S-Class Autonomous car. Toyota is developing a car that “will never be responsible for a collision” and has invested $1B to advance artificial intelligence. Nissan has promised self-driving cars by 2020. And just last month, Toyota announced a $500M investment in Uber to develop self-driving Toyota Sienna minivans that use Uber’s autonomous technology—all by 2021.

The race to full autonomy is on and, so far, there is no clear winner. That’s precisely why it is wise for investors to seek opportunities across the entire supply chain and to focus on enabling technologies such as sensing, geopositioning, and AI. These and other key technologies will be mandatory for tomorrow’s AV systems, regardless of who ends up leading the pack toward the ultimate goal: Level 5 autonomy.

Defining Autonomy

The dictionary defines ‘autonomy’ as “a state of self-governing.” Robot autonomy refers to the ability of a machine to take input from the world through its sensors, reason about the input, make decisions, and act without human intervention. When talking about autonomous vehicles, there is a broad spectrum of autonomy that defines a vehicle’s actual capabilities. To understand where all the various advances fall, it is useful to look at the National Highway Traffic Safety Administration’s (NHTSA) classification of five levels of autonomy:

- Level 1: Provides feedback to the human driver (example: rear-facing cameras)

- Level 2: Provides localized, active control (example: antilock brakes)

- Level 3: Includes some level of autonomy, but the human must be ready to take over (example: Tesla’s Autopilot)

- Level 4: Includes autonomy in some places, some of the time

- Level 5: Includes autonomy in all environments, all of the time
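
For readers who think in code, the five levels above can be written down as a small ordered type. This is only an illustrative sketch: the member names and the helper function are assumptions made for this example, not part of any official classification or API.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """The five levels as summarized in the list above (member names are illustrative)."""
    DRIVER_FEEDBACK = 1      # feedback to the human driver, e.g. rear-facing cameras
    LOCALIZED_CONTROL = 2    # localized, active control, e.g. antilock brakes
    SUPERVISED_AUTONOMY = 3  # some autonomy; the human must be ready to take over
    LIMITED_AUTONOMY = 4     # autonomy in some places, some of the time
    FULL_AUTONOMY = 5        # autonomy in all environments, all of the time


def human_must_be_ready(level: AutonomyLevel) -> bool:
    """Hypothetical helper: through Level 3, a human has to stay ready to drive."""
    return level <= AutonomyLevel.SUPERVISED_AUTONOMY
```

Because the levels are ordered, comparisons such as `level >= AutonomyLevel.LIMITED_AUTONOMY` read naturally when describing what a given vehicle can do.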

The true Holy Grail is Level 5 autonomy. To get there, researchers, including our own team at CSAIL, are pushing the envelope along each of three axes: vehicle speed, complexity of the environment, and the complexity of interactions with things in motion, such as people, bicyclists, and other cars.

How close are we? Most of today’s technology supports Level 4 deployments: vehicles at low speeds in low-complexity environments with low levels of interaction. Level 4 is most appropriate (and safe) on private roads, such as in retirement communities and school campuses, or on low-congestion public roads. But there are still many situations that technology is not yet able to address effectively: traffic congestion, high speeds, inclement weather (rain, snow), unpredictable human drivers, remote areas with no high-density maps, and “corner cases” (extreme situations outside the normal learning parameters). All of these present challenges that require a higher level of machine learning.

Today’s self-driving vehicles are able to complete massive amounts of simultaneous computations, crunch huge quantities of data, and run intricate algorithms in real time. These technologies have taken us to a point in time where we can realistically discuss the future of full autonomy on the roads. The next milestone, driving autonomously for long stretches of highway, is almost within reach. But on the road to true Level 5 autonomy, there is still plenty of work ahead.

Building a Map to the Future

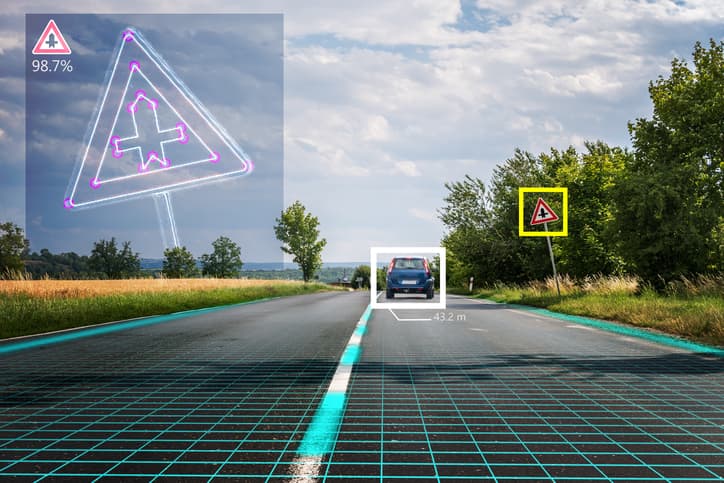

How do we get from where we are today to the Holy Grail of Level 5 autonomy? To achieve that goal, we are working to bring in the human-like elements needed to advance today’s technology to Level 5 capabilities. Machines can already outperform humans in many ways, such as estimating with great accuracy how quickly another vehicle is moving. What they still lack, however, is the ability to “see” like the human eye, to effectively recognize objects and situations and make sense of them in the real world. While we spend our whole lives learning how to observe the world and make sense of it, machines require algorithms to do this, and data: lots and lots (and lots!) of data, all annotated to translate what each thing being perceived actually means. To make Level 5 autonomy possible, we have to develop new algorithms that help machines learn from far fewer examples in an unsupervised way, without constant human intervention.

To enable today’s self-driving vehicles to operate effectively without Level 5 autonomy, map-based solutions provide a stopgap. To begin, a vehicle drives on every possible road segment and makes a map in feature space. That map provides autonomous vehicles with the data needed to navigate each ride, including planning a path from point A to point B and localizing the vehicle as it moves along the designated route. This is why most self-driving car companies only test their fleets in certain major cities where they’ve developed detailed 3D maps that are meticulously labeled with the exact positions of traffic lanes, curbs, stop signs, and more. This detailed mapping includes environmental features detected by the sensors of the vehicle. Maps are created using 3D LIDAR systems that rely on light to scan the local space, accumulating millions of data points and extracting the features defining each place.
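
To make the idea of planning a path from point A to point B on a pre-built map concrete, here is a minimal sketch that treats the map as a graph of road segments and finds the shortest route with Dijkstra’s algorithm. It is a toy under stated assumptions, not how any production AV stack or our own systems plan routes: the `ROAD_MAP` data, the intersection names, and the `plan_route` function are all hypothetical.

```python
import heapq

# Hypothetical road map: intersection -> {neighboring intersection: segment length in meters}.
ROAD_MAP = {
    "A": {"C": 400.0, "D": 250.0},
    "C": {"A": 400.0, "B": 300.0},
    "D": {"A": 250.0, "E": 200.0},
    "E": {"D": 200.0, "B": 150.0},
    "B": {"C": 300.0, "E": 150.0},
}


def plan_route(road_map, start, goal):
    """Return (total_length, [intersections]) for the shortest route, or None if unreachable."""
    # Priority queue of (distance so far, current intersection, path taken).
    frontier = [(0.0, start, [start])]
    visited = set()
    while frontier:
        dist, node, path = heapq.heappop(frontier)
        if node == goal:
            return dist, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, length in road_map.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(frontier, (dist + length, neighbor, path + [neighbor]))
    return None


print(plan_route(ROAD_MAP, "A", "B"))  # (600.0, ['A', 'D', 'E', 'B'])
```

The sketch also shows why the map matters: a destination that was never surveyed simply does not exist in the graph, so no route to it can be planned.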

While the mapping system has served its purpose of getting AVs on the road, the reliance on detailed pre-built maps is a problem. To achieve the goal of universal acceptance, AVs must be able to drive in environments where maps do not exist. On the millions of miles of roads that are unpaved. On unlit or unreliably marked streets. Anywhere and everywhere a person would want to drive.

The Road to Full Autonomy

At MIT, our CSAIL team has been developing a solution to serve as a first step for enabling self-driving cars to navigate unmapped roads using only GPS and sensors. Called MapLite, our system combines GPS data (similar to what is available today on Google Maps) with data taken from LIDAR sensors. Working in concert, these two elements allow a car to drive autonomously on unpaved country roads and reliably detect what’s ahead more than 100 feet in advance. Other researchers have also been working on mapless approaches, using both perception sensors like LIDAR and vision-based approaches, with varying degrees of success.

Anywhere, anytime autonomy is still some years away, and technology isn’t the only barrier. While progress has been significant on the technical side, getting policy to catch up has been an understandably complex and incremental process. Policymakers have yet to decide how autonomous vehicles should be regulated, including what kinds of vehicles should be allowed on the road, who is allowed to operate them, how their safety should be tested, and how liability should be managed. They are still wading through the implications of a potential patchwork of state-by-state laws and regulations and determining the impact of harmonizing these policies. And they are just beginning to search for the most effective ways to encourage AV adoption, such as smart road infrastructure, dedicated highway lanes, and manufacturer or consumer incentives.

These are complex issues, but as policymakers continue to refine the details on their end, researchers are pushing the boundaries and driving change at lightning speed. Level 4 autonomy is already here, and the Holy Grail of Level 5 isn’t far behind. I have no doubt that, very soon, self-driving cars “will transform the way we live, the way we get around, and the way we do business.” Our team at CSAIL is working to make safe, reliable, and universally accepted self-driving cars a reality as soon as possible.

About the Author

A member of the ROBO Global Strategic Advisory Board, Daniela Rus is the Director of CSAIL at MIT. She serves as the Director of the Toyota-CSAIL Joint Research Center and is a member of the science advisory board of the Toyota Research Institute.

Rus’s research interests are in robotics and artificial intelligence. The recipient of the 2017 Engelberger Robotics Award from the Robotic Industries Association, she is also a Class of 2002 MacArthur Fellow, a fellow of ACM, AAAI, and IEEE, and a member of the National Academy of Engineering and the American Academy of Arts and Sciences. She earned her PhD in Computer Science from Cornell University.

[1] https://www.osha.gov/Publications/motor_vehicle_guide.pdf

[2] http://apps.who.int/iris/bitstream/10665/83789/1/WHO_NMH_VIP_13.01_eng.pdf?ua=1

[3] From autonomous driving ppt